Validity of Exact

Validation of a psychological or educational test is not the same thing as the psychometric standardisation of a test, nor should it be confused with the reliability of a test. ‘Reliability’ generally refers to the extent to which a test can be expected to give the same results when administered on different occasions or by a different administrator, or the extent to which the components of a test give consistent results (see Online version of Exact: equivalence study). ‘Validity’ is a measure of the extent to which the test measures what it is supposed to measure (e.g. reading or spelling ability). Validity is usually established by comparing the test with some independent criterion or with a recognised test of the same ability. Inevitably, this raises the thorny issue of what is the ‘gold standard’ – i.e. which is the ‘best’ measure of any given ability against which all others should be compared? Professional opinions differ as to the merits of various tests, and consequently there are no generally agreed ‘gold standards’ for assessing reading, spelling and writing. Hence, the conventional method of establishing test validity is to show that a new test produces results that agree reasonably closely with well-established test(s) of the same ability.
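As an illustration of the comparison described above, agreement between a new test and an established test is commonly summarised by a Pearson correlation coefficient. The following is a minimal sketch only: the function name `pearson_r` and the eight pairs of scores are hypothetical and are not taken from the Exact validation data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dev_x = [x - mean_x for x in xs]
    dev_y = [y - mean_y for y in ys]
    cross_products = sum(dx * dy for dx, dy in zip(dev_x, dev_y))
    ss_x = sum(dx * dx for dx in dev_x)
    ss_y = sum(dy * dy for dy in dev_y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cross_products / sqrt(ss_x * ss_y)


# Hypothetical scores for eight students (illustrative only, not study data).
new_test = [92, 105, 88, 110, 97, 101, 84, 115]
established_test = [95, 102, 85, 112, 99, 98, 90, 111]

validity_coefficient = pearson_r(new_test, established_test)
print(f"r = {validity_coefficient:.2f}")
```

A value close to 1 would indicate that the two tests rank students in much the same order; a value near 0 would indicate little agreement.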

Construct validity tests and results

In validating Exact, the following established tests were selected for comparison: TOWRE (Test of Word Reading Efficiency) – a speeded test of recognition of real words and nonwords; WRAT4 (Wide Range Achievement Test, Fourth Edition) Reading and Spelling – untimed measures of single word reading and spelling accuracy; the Edinburgh Reading Test – a measure of reading comprehension ability; and the Hedderly Sentence Completion Test – a test of handwriting speed. Note that WRAT4 and TOWRE have US norms but are nevertheless widely used in assessments for exam access in the UK.

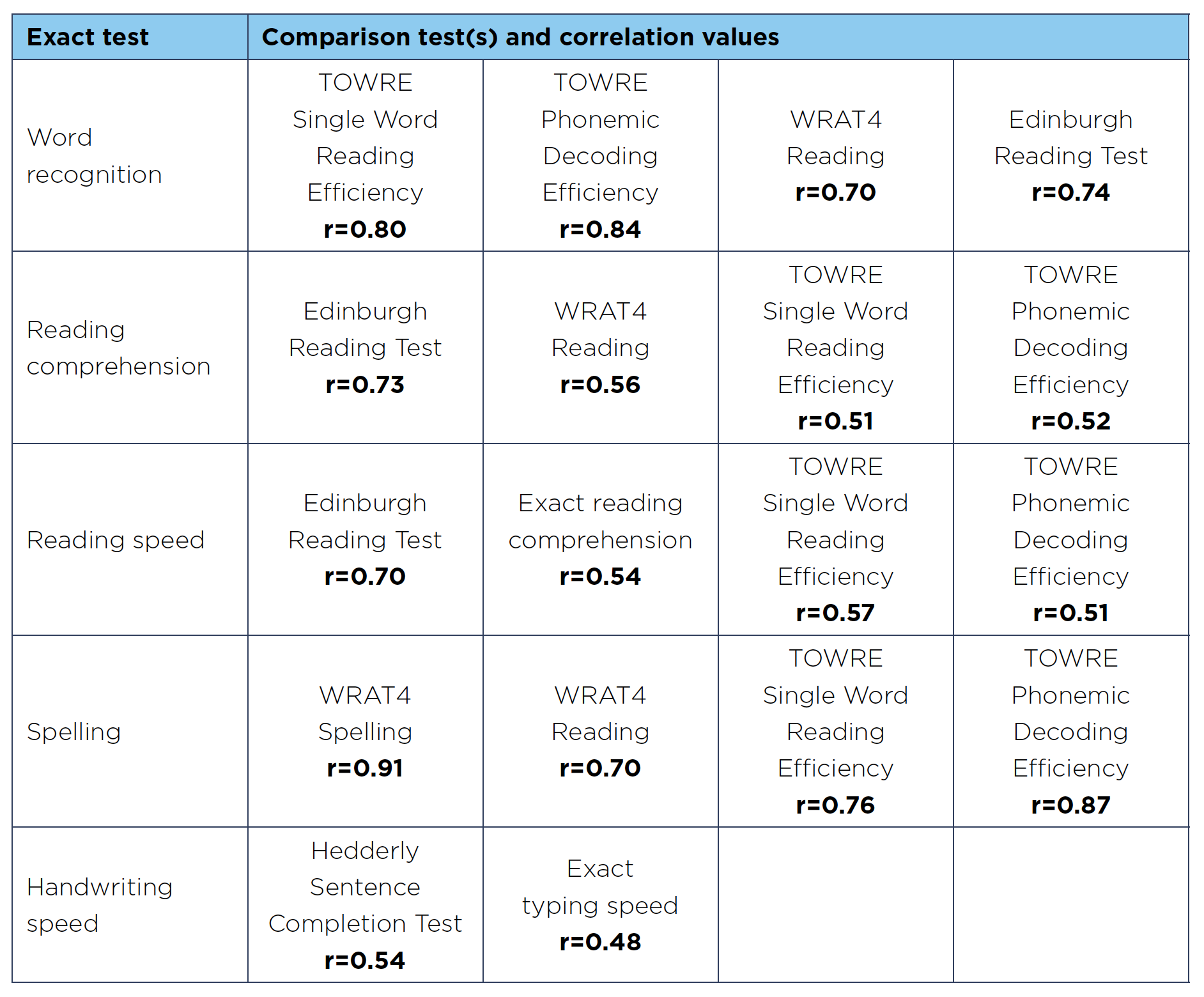

Separate studies, using different samples, were carried out for the validation and the standardisation of Exact. An independent validation study was carried out in 2010-11 by Dr Joanna Horne of the Psychology Department, University of Hull, in four schools in different parts of Britain, involving a total of 103 students. The results showed that all the tests in Exact correlate significantly (p<0.01) with equivalent conventional (pen and paper or individually administered) tests that are in regular use for exam access assessments, providing clear evidence of the validity of the tests in Exact. The results are shown in Table 2.

Table 2. Construct validity results for the tests in Exact*

* All correlations are significant at the p<0.01 level.
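To give a sense of what significance at the p<0.01 level implies for a sample of 103 students, the sketch below estimates the smallest correlation that would reach that level. It uses the Fisher z approximation, which is an assumption of this example; the source does not state which significance test was used. The function names and the illustrative values of r are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def critical_r(n, alpha=0.01):
    """Smallest |r| significant at two-tailed level alpha (Fisher z approximation)."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return tanh(z_crit / sqrt(n - 3))


def p_value(r, n):
    """Approximate two-tailed p-value for the null hypothesis of zero correlation."""
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


n_students = 103  # sample size reported for the validation study

print(f"critical r at p<0.01: {critical_r(n_students):.3f}")
# r = 0.30 and r = 0.20 are hypothetical values chosen to fall either side of the threshold.
print(f"p for r = 0.30: {p_value(0.30, n_students):.4f}")
print(f"p for r = 0.20: {p_value(0.20, n_students):.4f}")
```

With a sample of this size, even a fairly modest correlation is statistically significant, so the size of each correlation is more informative than its significance alone.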

It should be noted that the differential correlations shown in the table follow a logical pattern. The Exact word recognition test correlates more highly with the TOWRE tests than with WRAT4 Reading. This is because the TOWRE tests are speeded tests (like Exact word recognition), while WRAT4 Reading is an untimed test. Exact reading comprehension (a timed test) correlates more highly with the Edinburgh Reading Test (a test of comprehension) than it does with the measures of phonic skills and individual word recognition. Correspondingly, the Exact reading speed measure also correlates more highly with the Edinburgh Reading Test (a timed test) than with the Exact reading comprehension score, showing that reading speed and reading comprehension have been separated out more in Exact, whereas the Edinburgh Reading Test conflates the two measures. Exact spelling shows a very high correlation with WRAT4 Spelling – higher than with the various reading measures. (Note that, as might be expected, reading and spelling skills tend to be significantly related: the correlation between WRAT4 Reading and WRAT4 Spelling, for example, was found to be 0.70, the same value as that between Exact spelling and WRAT4 Reading.)

To give some idea of expected levels of correlation, the correlation values between WRAT4 Reading and the other comparison tests were as follows: TOWRE SWE 0.64; TOWRE PDE 0.77; Edinburgh RT 0.67. These values are, in fact, lower than the corresponding values for Exact word recognition, suggesting that Exact word recognition has somewhat better concurrent validity than WRAT4 Reading.
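When comparing correlation values such as these, it can help to consider their sampling uncertainty. The sketch below computes approximate 95% confidence intervals for the three values quoted above using the Fisher z transformation. It assumes that all 103 students in the validation study contributed to each correlation, which the source does not confirm; the function name `correlation_ci` is hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def correlation_ci(r, n, level=0.95):
    """Approximate confidence interval for a correlation (Fisher z transformation)."""
    z = atanh(r)
    half_width = NormalDist().inv_cdf(0.5 + level / 2) / sqrt(n - 3)
    return tanh(z - half_width), tanh(z + half_width)


# Assumption: the full validation sample contributed to each correlation.
n_students = 103

# Correlations with WRAT4 Reading as quoted in the text.
reported = {"TOWRE SWE": 0.64, "TOWRE PDE": 0.77, "Edinburgh RT": 0.67}

intervals = {name: correlation_ci(r, n_students) for name, r in reported.items()}
for name, (lower, upper) in intervals.items():
    print(f"WRAT4 Reading vs {name}: r = {reported[name]:.2f}, "
          f"95% CI [{lower:.2f}, {upper:.2f}]")
```

Intervals of this kind indicate how much weight a small difference between two correlations can reasonably bear.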

Validation of the Exact typing to dictation test

Exact Handwriting Speed is significantly correlated with the Hedderly Sentence Completion Test (a commonly used measure of writing speed). Since there are no comparable tests of typing speed, no validation figures are given for this component of Exact. However, an independent study of the writing and typing to dictation tests in Exact has been published, and this provides support for the validity of this test (see note 7). This paper reports on two studies using computer-based dictation tasks for measuring speed of typing and handwriting.

In the first study, 952 students, aged 11-17 years, attending 19 different secondary schools, handwrote and typed passages dictated by a computer. For both handwriting and typing, a very high correlation was found between speed calculated by the computer and that calculated by a human assessor, establishing that computerised calculation is a reliable, as well as convenient and time-saving, method of establishing writing speed. There were greater age-related gains in speed of typing compared with handwriting, and greater variation in typing skill than handwriting skill. However, almost half of students with slow handwriting (below standard score 85) were found to have average or better typing speeds.
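The cut-off of standard score 85 can be illustrated as follows. The sketch assumes the conventional standard score scale (mean 100, standard deviation 15), on which 85 lies one standard deviation below the mean, and a simple linear conversion from words per minute. The norm values, student data and function names are all hypothetical; the actual Exact norms are age-related and are not reproduced here.

```python
SLOW_CUTOFF = 85  # standard scores below this are treated as 'slow' in the text


def standard_score(raw, norm_mean, norm_sd):
    """Convert a raw speed to a standard score (assumed scale: mean 100, SD 15)."""
    return 100 + 15 * (raw - norm_mean) / norm_sd


# Hypothetical norms in words per minute: (mean, standard deviation).
HANDWRITING_NORM = (18.0, 4.0)
TYPING_NORM = (22.0, 8.0)

# Hypothetical students: (handwriting wpm, typing wpm).
students = {"A": (12.0, 25.0), "B": (13.0, 10.0), "C": (19.0, 21.0)}


def slow_writers_with_adequate_typing(records):
    """Names of students with slow handwriting but typing at or above the cut-off."""
    selected = []
    for name, (handwriting_wpm, typing_wpm) in records.items():
        handwriting_ss = standard_score(handwriting_wpm, *HANDWRITING_NORM)
        typing_ss = standard_score(typing_wpm, *TYPING_NORM)
        if handwriting_ss < SLOW_CUTOFF and typing_ss >= SLOW_CUTOFF:
            selected.append(name)
    return selected


print(slow_writers_with_adequate_typing(students))
```

Students identified in this way correspond to the group described above: slow at handwriting, but with typing speeds in the average range or better.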

In the second study, 55 students aged 13-14 were administered these tasks together with the Hedderly Sentence Completion Test of handwriting speed. Despite the clear differences between the two test formats, a reasonable level of agreement was found between them. Almost one-third of students with slow handwriting in the computer-based task had not previously been identified as having support needs but would potentially be disadvantaged in written examinations. By eliminating the ‘thinking’ time involved in free writing, computerised dictation tasks give ‘purer’ measures, which can reveal physical handwriting and/or typing problems. They also simulate examination requirements more closely than mechanical repetitive tests of writing speed, and should be particularly helpful in establishing whether students need access arrangements in examinations.

7 Horne, J., Ferrier, J., Singleton, C. & Read, C. (2011) Computerised assessment of handwriting and typing speed. Educational and Child Psychology, 28(2), 52-66.