Understanding your CAT4 data

CAT4 reports contain rich assessment data. To interpret this data, you need to understand the different measures shown in the reports.

The CAT4 individual report for teachers provides an in-depth analysis of each student's results. It profiles the student's developed abilities across the four batteries (Verbal Reasoning, Quantitative Reasoning, Non-verbal Reasoning and Spatial Ability) to highlight strengths and areas for improvement.

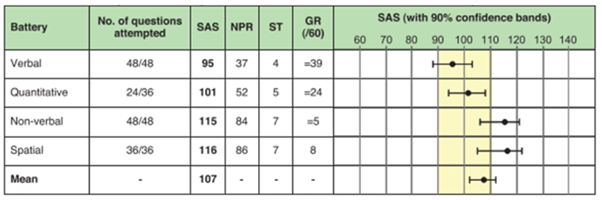

Your report will provide you with the student's Standard Age Score (SAS), National Percentile Rank (NPR), stanine and Group Rank (GR). The example report above shows a set of scores for an individual student.
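The report calculates these measures for you, but the relationships between them can be sketched in a few lines. The minimal Python sketch below assumes that SAS is scaled to a mean of 100 and a standard deviation of 15, and approximates the NPR with a normal curve; real CAT4 reports use published norm tables, so treat its output as illustrative only. It also assumes that Group Rank 1 is the highest score in the group tested and that tied students share a rank. All function names are hypothetical.

```python
import math

SAS_MEAN = 100.0  # Standard Age Scores are scaled to a mean of 100...
SAS_SD = 15.0     # ...and a standard deviation of 15


def sas_to_percentile(sas: float) -> float:
    """Approximate the NPR from a SAS, ASSUMING a normal distribution
    (real reports look this up in norm tables instead)."""
    z = (sas - SAS_MEAN) / SAS_SD
    # Normal cumulative distribution expressed via the error function
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2.0)))


def sas_to_stanine(sas: float) -> int:
    """Stanines have a mean of 5 and an SD of 2, so each of the nine
    bands is half a standard deviation wide; results are clamped to 1-9."""
    z = (sas - SAS_MEAN) / SAS_SD
    return max(1, min(9, math.floor(2.0 * z + 5.5)))


def group_rank(scores: dict[str, float], student: str) -> tuple[int, int]:
    """Return (rank, group size); rank 1 is the highest score and
    tied scores share the better rank (an assumption)."""
    target = scores[student]
    rank = 1 + sum(1 for s in scores.values() if s > target)
    return rank, len(scores)


if __name__ == "__main__":
    sas = 115  # hypothetical example score
    print(f"SAS {sas}: NPR ~{sas_to_percentile(sas):.0f}, stanine {sas_to_stanine(sas)}")
```

Under these assumptions, a SAS of 100 sits at the 50th percentile and stanine 5, while a SAS of 115 (one standard deviation above the mean) falls at roughly the 84th percentile.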

See the Training section of our website for more videos explaining CAT4.

- UK customers: Training section

- International customers: Training section

What is a student profile?

The CAT4 student profile describes a student’s learning bias or preference, based on a comparison of the scores obtained on the Verbal Reasoning and Spatial Ability Batteries. The profile may not match a preference or bias that is observed or used in the classroom. Rather, it suggests an underlying bias towards learning in a particular way, or in a way that combines different skills, drawing on the strengths demonstrated in the CAT4 results.

Verbal and spatial abilities may be seen as the two extremes of a continuum of ability, with numerical and non-verbal abilities combining these extremes to differing degrees. The CAT4 profile contrasts the two extremes, using the stanine score as the most relevant measure, and also takes into account the level of ability shown in each area.

Please see ‘The CAT4 Student profile’ in the Teacher Guidance for more information.

Student ability bias

A student’s results may point to an ability bias, although in many cases there will be no bias.

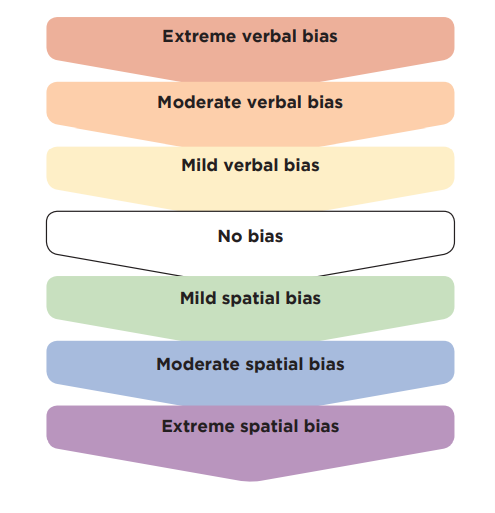

The Verbal Reasoning and Spatial Ability Batteries form the basis of this analysis. Each profile is expressed either as a mild, moderate or extreme bias towards verbal or spatial learning or, where no bias is discernible (that is, when the scores from the two batteries are similar), as an even profile across the two batteries.
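To make the structure of the seven categories concrete, here is a minimal Python sketch that classifies a profile from the difference between a student's Verbal Reasoning and Spatial Ability stanines. The cut-off values in `BIAS_THRESHOLDS` are hypothetical placeholders, not the rules CAT4 actually applies, and the sketch ignores the overall level of ability, which the real profile also takes into account. The function name `bias_profile` is likewise hypothetical.

```python
# HYPOTHETICAL thresholds for illustration only, NOT the published CAT4 rules.
# Each entry is (minimum stanine gap, strength label), checked from largest down.
BIAS_THRESHOLDS = ((4, "extreme"), (3, "moderate"), (2, "mild"))


def bias_profile(verbal_stanine: int, spatial_stanine: int) -> str:
    """Place a student in one of seven categories using only the
    verbal-minus-spatial stanine difference."""
    gap = verbal_stanine - spatial_stanine
    for minimum, strength in BIAS_THRESHOLDS:
        if abs(gap) >= minimum:
            direction = "verbal" if gap > 0 else "spatial"
            return f"{strength} {direction} bias"
    # Small gaps mean the two battery scores are similar
    return "even profile"


if __name__ == "__main__":
    print(bias_profile(7, 5))  # hypothetical stanines
```

Because the same thresholds apply in both directions, the sketch yields three verbal categories, three spatial categories and one even profile.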

Student biases

The profile for each student will be in one of the following seven categories: